1 Introduction

Magnetic Resonance Imaging (MRI) is an indispensable clinical and research tool used to diagnose and study several diseases of the human body. It has become a sine qua non in various fields of radiology, medicine, and psychiatry. Unlike computed tomography (CT), it can provide detailed images of the soft tissue and does not require any radiation, thus making it less risky to the subjects. MRI scanners sample a patient’s anatomy in the frequency domain that we will call “k-space”. The number of rows/columns that are acquired in k-space is proportional to the quality (and spatial resolution) of the reconstructed MR image. To get higher spatial resolution, longer scan time is required due to the increased number of k-space points that need to be sampled (Fessler, 2010). Hence, the subject has to stay still in the MRI scanner for the duration of the scan to avoid signal drops and motion artifacts. Many researchers have been trying to reduce the number of k-space lines to save scanning time, which leads to the well-known problem of “aliasing” as a result of the violation of the Nyquist sampling criteria (Nyquist, 1928). Reconstructing high-resolution MR images from the undersampled or corrupted measurements was a primary focus of various sparsity promoting methods, wavelet-based methods, edge-preserving methods, and low-rank based methods. This paper reviews the literature on solving the inverse problem of MR image reconstruction from noisy measurements using Deep Learning (DL) methods, while providing a brief introduction to classical optimization based methods. We shall discuss more about this in Sec. 1.1.

A DL method learns a non-linear function from a set of all possible mapping functions . The accuracy of the mapping function can be measured using some notion of a loss function . The empirical risk (Vapnik, 1991), , can be estimated as and the generalization error of a mapping function can be measured using some notion of accuracy measurement. MR image reconstruction using deep learning, in its simplest form, amounts to learning a map from the undersampled k-space measurement , or to an unaliased MR image , or , where , are the height and width of the complex valued image. In several real-world cases, higher dimensions such as time, volume, etc., are obtained and accordingly the superscripts of and change to . For the sake of simplicity, we will use assume and .

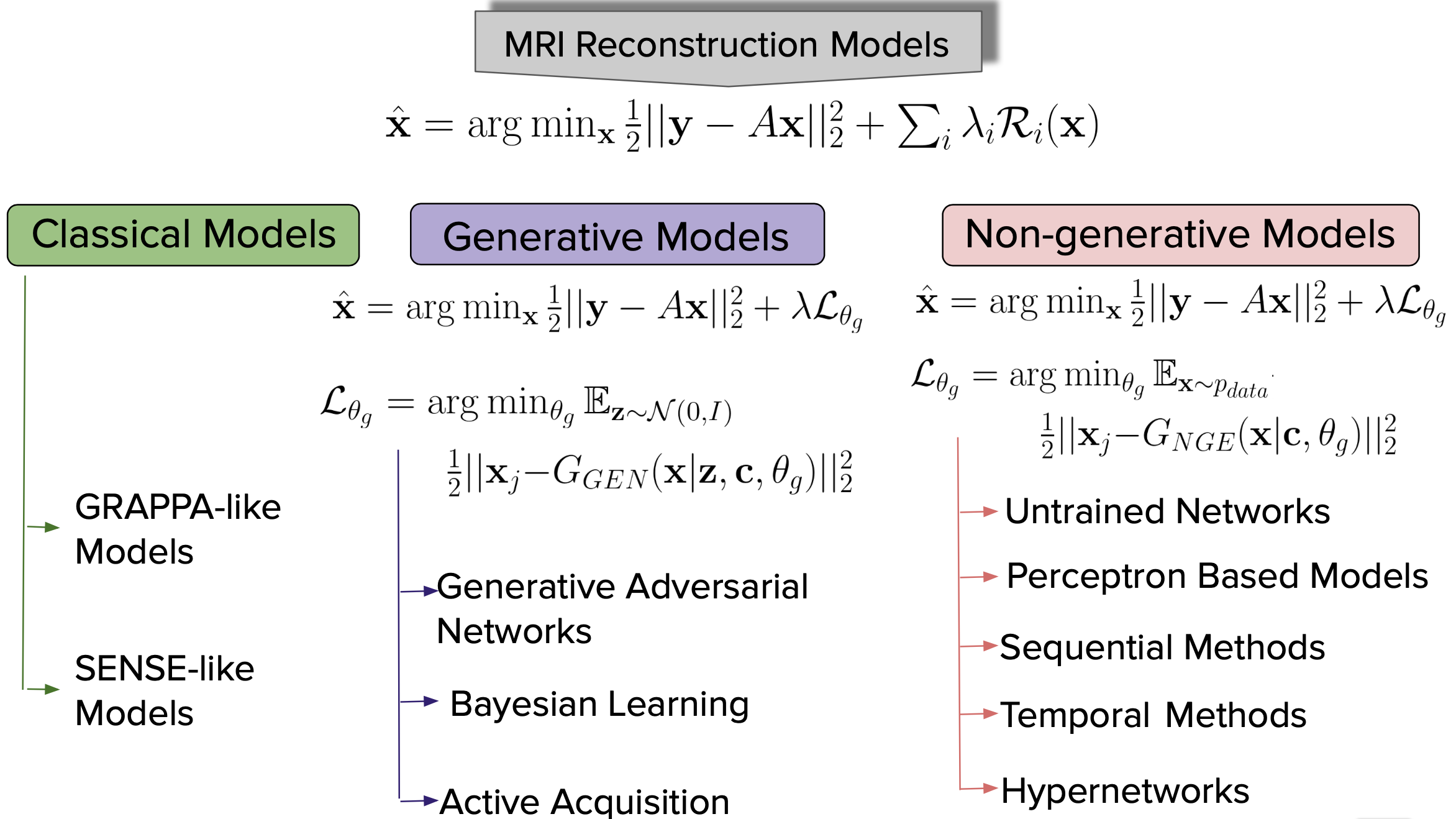

In this survey, we focus on two broad aspects of DL methods, i.e. (i) generative models, which are data generation processes capturing the underlying density of data distribution; and (ii) non-generative models, that learn complex feature representations of images intending to learn the inverse mapping from k-space measurements to MR images. Given the availability and relatively broad access to open-source platforms like Github, PyTorch (Paszke et al., 2019), and TensorFlow (Abadi et al., 2015), as well as large curated datasets and high-performance GPUs, deep learning methods are actively being pursued for solving the MR image reconstruction problem with reduced number of samples while avoiding artifacts and boosting the signal-to-noise ratio (SNR).

In Sec. 1.1, we briefly discuss the mathematical formulation that utilizes k-space measurements from multiple receiver coils to reconstruct an MR image. Furthermore, we discuss some challenges of the current reconstruction pipeline and discuss the DL methods (in Sec. 1.2) that have been introduced to address these limitations. We finally discuss the open questions and challenges to deep learning methods for MR reconstruction in sections 2.1, 2.2, and 3.

1.1 Mathematical Formulation for Image Reconstruction in Multi-coil MRI

Before discussing undersampling and the associated aliasing problem, let us first discuss the simple case of reconstructing an MR image, , from a fully sampled k-space measurement, , using the Fourier transform :

| (1) |

where is the associated measurement noise typically assumed to have a Gaussian distribution (Virtue and Lustig, 2017) when the k-space measurement is obtained from a single receiver coil.

Modern MR scanners support parallel acquisition using an array of overlapping receiver coils modulated by their sensitivities . So Eqn. 1 changes to: , where is the number of receiver coils. We use for the undersampled k-space measurement coming from the receiver coil. To speed up the data acquisition process, multiple lines of k-space data (for cartesian sampling) are skipped using a binary sampling mask that selects a subset of k-space lines from in the phase encoding direction:

| (2) |

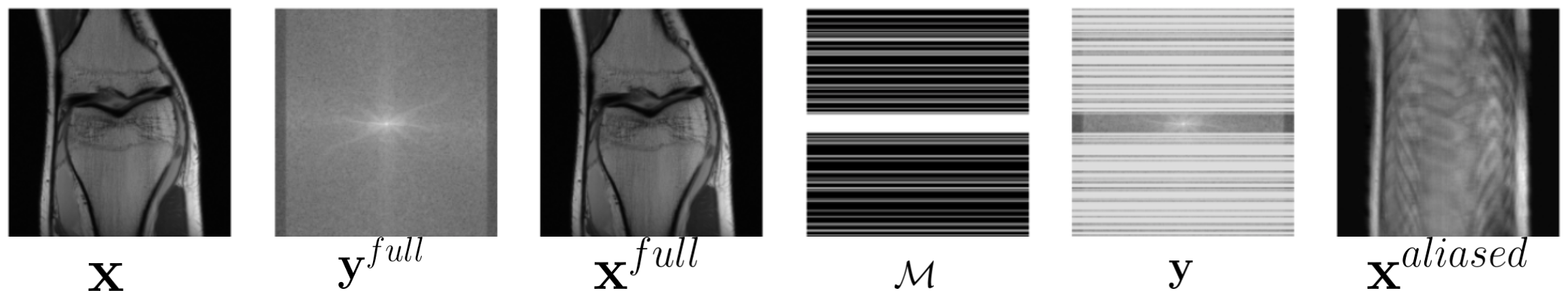

An example of , y, is shown in Fig 1.

To estimate the MR image x from the measurement, a data fidelity loss function is typically used to ensure that the estimated data is as close to the measurement as possible. A typical loss function is the squared loss, which is minimized to estimate x:

| (3) |

We borrow this particular formulation from (Sriram et al., 2020a; Zheng et al., 2019). This squared loss function is quite convenient if we wish to compute the error gradient during optimization.

However, the formulation in Eqn. 3 is under-determined if data is undersampled and does not have a unique solution. Consequently, a regularizer is typically added to solve such an ill-conditioned cost function: 111The regularization term, is related to the prior, , of a maximum a priori (MAP) extimation of x, i.e. . In fact, in Ravishankar et al. (2019) the authors loosely define , which promotes some desirable image properties such as spatial smoothness, sparsity in image space, edge preservation, etc. with a view to get a unique solution.

| (4) |

Please note that each is a separate regularizer, while the s are hyperparameters that control the properties of the reconstructed image while avoiding over-fitting. Eqn. 3 along with the regularization term can be optimized using various methods, such as (i) the Morozov formulation, ; (ii) the Ivanov formulation, i.e. ; or (iii) the Tikonov formulation, , discussed in (Oneto et al., 2016).

In general, the Tikonov formulation can be designed using a physics based, sparsity promoting, dictionary learning, or a deep learning based model. But there are several factors that can cause loss in data quality (especially small anatomical details) such as inaccurate modeling of the system noise, complexity, generalizability etc. To overcome these limitations, it is essential to develop inverse mapping methods that not only provide good data fidelity but also generalize well to unseen and unexpected data. In the next section, we shall describe how DL methods can be used as priors or regularizers for MR reconstruction.

.

1.2 Deep Learning Priors for MR Reconstruction

We begin our discussion by considering DL methods with learn-able parameters . The learn-able parameters can be trained using some notion of a learning rule that we shall discuss in Sec. 3. A DL training process helps us to find a function that acts as a regularizer to Eqn. 4 with an overarching concept of an inverse mapping, i.e; (please note that, we shall follow (Zheng et al., 2019) to develop the optimization formulation)

| (5) |

and z is a latent variable capturing the statistical regularity of the data samples, while c is a conditional random variable that depends on a number of factors such as: undersampling of the k-space (Shaul et al., 2020; Oksuz et al., 2019a; Shitrit and Raviv, 2017), the resolution of the image (Yang et al., 2017; Yuan et al., 2020), or the type of DL network used (Lee et al., 2019). Based on the nature of the learning, there are two types of DL methods known as generative models, and non-generative models. We shall start with a basic understanding of DL methods to a more in-depth study of different architectures in Secs. 4 and 5.

In generative modeling, the random variable z is typically sampled from a noisy Gaussian distribution, with or without the presence of the conditional random variable, i.e.;

| (6) |

There are various ways to learn the parameters of Eqn. 6. For instance, the Generative Adversarial Network (GAN) (Goodfellow et al., 2014) allows us to learn the generator function using an interplay between two modules, while the Variational Autoencoders (VAEs) (Kingma and Welling, 2013) learns by optimizing the evidence lower bound (ELBO), or by incorporating a prior in a Bayesian Learning setup as described in Section 4.2. It is shown in the literature that a generative model can efficiently de-alias an MR image that had undergone a or undersampling in k-space (Zbontar et al., 2018).

The non-generative models on the other hand do not learn the underlying latent representation, but instead learn a mapping from the measurement space to the image space. Hence, the random variable z is not required. The cost function for a non-generative model is given by:

| (7) |

The function is a non-generative mapping function that could be a Convolutional Neural Network (CNN) (Zheng et al., 2019; Akçakaya et al., 2019; Sriram et al., 2020b), a Long Short Term Memory (LSTM) (Hochreiter and Schmidhuber, 1997), or any other similar deep learning model. The non-generative models show a significant improvement in image reconstruction quality compared to classical methods. We shall describe the generative and the non-generative modeling based approaches in detail in Secs. 4 and 5 respectively. Below, we give a brief overview of the classical or the non-DL based approaches for MR image reconstruction.

1.3 Classical Methods for MR Reconstruction

In the literature, several approaches can be found that perform an inverse mapping to reconstruct the MR image from k-space data. Starting from analytic methods (Fessler and Sutton, 2003; Laurette et al., 1996), there are several works that provide ways to do MR reconstruction, such as the physics based image reconstruction methods (Roeloffs et al., 2016; Tran-Gia et al., 2016; Maier et al., 2019; Tran-Gia et al., 2013; Hilbert et al., 2018; Sumpf et al., 2011; Ben-Eliezer et al., 2016; Zimmermann et al., 2017; Schneider et al., 2020), the sparsity promoting compressed sensing methods (Feng and Bresler, 1996; Bresler and Feng, 1996; Candès et al., 2006), and low-rank based approaches (Haldar, 2013). All these methods can be roughly categorized into two categories, i.e. (i) GRAPPA-like methods: where prior assumptions are imposed on the k-space; and (ii) SENSE-like methods: where an image is reconstructed from the k-space while jointly unaliasing (or dealiasing) the image using sparsity promoting and/or edge preserving image regularization terms.

A few k-space methods estimate the missing measurement lines by learning kernels from an already measured set of k-space lines from the center of the k-space (i.e., the auto-calibration or ACS lines). These k-space based methods include methods such as SMASH (Sodickson, 2000), VDAUTOSMASH (Heidemann et al., 2000), GRAPPA and its variations (Bouman and Sauer, 1993; Park et al., 2005; Seiberlich et al., 2008). The k-t GRAPPA (Huang et al., 2005) takes advantage of the correlations in the k-t space and interpolates the missing data. On the other hand, sparsity promoting low rank based methods are based on the assumption that, when the image reconstruction follows a set of constraints (such as sparsity, smoothness, parallel imaging, etc.), the resultant k-space should follow a structure that has low rank. The low rank assumption has been shown to be quite successful in dynamic MRI (Liang, 2007), functional MRI (Singh et al., 2015), and diffusion MRI (Hu et al., 2019). In this paper we give an overview of the low-rank matrix approaches (Haldar, 2013; Jin et al., 2016; Lee et al., 2016; Ongie and Jacob, 2016; Haldar and Zhuo, 2016; Haldar and Kim, 2017) in Sec. 2.1. While in k-t SLR (Lingala et al., 2011), a spatio-temporal total variation norm is used to recover the dynamic signal matrix.

The image space based reconstruction methods, such as, the model based image reconstruction algorithms, incorporate the underlying physics of the imaging system and leverage image priors such as neighborhood information (e.g. total-variation based sparsity, or edge preserving assumptions) during image reconstruction. Another class of works investigated the use of compressed sensing (CS) in MR reconstruction after its huge success in signal processing (Feng and Bresler, 1996; Bresler and Feng, 1996; Candès et al., 2006). Compressed sensing requires incoherent sampling and sparsity in the transform domain (Fourier, Wavelet, Ridgelet or any other basis) for nonlinear image reconstruction. We also describe dictionary learning based approaches that are a spacial case of compressed sensing using an overcomplete dictionary. The methods described in (Gleichman and Eldar, 2011; Ravishankar and Bresler, 2016; Lingala and Jacob, 2013; Rathi et al., 2011; Michailovich et al., 2011) show various ways to estimate the image and the dictionary from limited measurements.

1.4 Main Highlights of This Literature Survey

The main contributions of this paper are:

- •

We give a holistic overview of MR reconstruction methods, that includes a family of classical k-space based image reconstruction methods as well as the latest developments using deep learning methods.

- •

We provide a discussion of the basic DL tools such as activation functions, loss functions, and network architecture, and provide a systematic insight into generative modeling and non-generative modeling based MR image reconstruction methods and discuss the advantages and limitations of each method.

- •

We compare eleven methods that includes classical, non-DL and DL methods on fastMRI dataset and provide qualitative and quantitative results in Sec. 7.

- •

We conclude the paper with a discussion on the open issues for the adoption of deep learning methods for MR reconstruction and the potential directions for improving the current state-of-the-art methods.

2 Classical Methods for Parallel Imaging

This section reviews some of the classical k-space based MR image reconstruction methods and the classical image space based MR image reconstruction methods.

2.1 Inverse Mapping using k-space Interpolation

Classical k-space based reconstruction methods are largely based on the premise that the missing k-space lines can be interpolated (or extrapolated) based on a weighted combination of all acquired k-space measurement lines. For example, in the SMASH (Sodickson, 2000) method, the missing k-space lines are estimated using the spatial harmonics of order . The k-space signal can then be written as:

| (8) |

where, are the spatial harmonics of order , is the minimum k-space interval ( stands for field-of-view), and is the k-space measurement of an image x, are the co-ordinates in k-space along the phase encoding (PE) and frequency encoding (FE) directions, and represents the imaginary number. From Eqn. 8, one can note that the -line of k-space can be generated using -number of spatial harmonics and hence we can estimate convolution kernels to approximate the missing k-space lines from the acquired k-space lines. So, in SMASH a k-space measurement x, also known as a composite signal in common parlance, is basically a linear combination of number of component signals (k-space measurements) coming from receiver coils modulated by their sensitivities , i.e.

| (9) |

We borrow the mathematical notation from (Sodickson, 2000) and represent the composite signal in Eqn. 9 as follows:

| (10) |

However, SMASH requires the exact estimation of the sensitivity of the receiver coils to accurately solve the reconstruction problem.

To address this limitation, AUTO-SMASH (Jakob et al., 1998) assumed the existence of a fully sampled block of k-space lines called autocalibration lines (ACS) at the center of the k-space (the low frequency region) and relaxed the requirement of the exact estimation of receiver coil sensitivities. The AUTO-SMASH formulation can be written as:

| (11) |

We note that the AUTO-SMASH paper theoretically proved that it can learn a kernel that is, , that is, it can learn a linear shift invariant convolutional kernel to interpolate missing k-space lines from the knowledge of the fully sampled k-space lines of ACS region. The variable density AUTO-SMASH (VD-AUTO-SMASH) (Heidemann et al., 2000) further improved the reconstruction process by acquiring multiple ACS lines in the center of the k-space. The composite signal x is estimated by adding each individual component by deriving linear weights and thereby estimating the missing k-space lines. The more popular, generalized auto calibrating partially parallel acquisitions (GRAPPA) (Bouman and Sauer, 1993) method uses this flavour of VD-AUTO-SMASH, i.e. the shift-invariant linear interpolation relationships in k-space, to learn the coefficients of a convolutional kernel from the ACS lines. The missing k-space are estimated as a linear combination of observed k-space points coming from all receiver coils. The weights of the convolution kernel are estimated as follows: a portion of the k-space lines in the ACS region are artificially masked to get a simulated set of acquired k-space points and missing k-space points . Using the acquired k-space lines , we can estimate the weights of the GRAPPA convolution kernel by minimizing the following cost function:

| (12) |

where represents the convolution operation. The GRAPPA method has shown very good results for uniform undersampling, and is the method used in product sequences by Siemens and GE scanners. There are also recent methods (Xu et al., 2018; Chang et al., 2012) that show ways to learn non-linear weights of a GRAPPA kernel.

The GRAPPA method regresses k-space lines from a learned kernel without assuming any specific image reconstruction constraint such as sparsity, limited support, or smooth phase as discussed in (Kim et al., 2019). On the other hand, low-rank based methods assume an association between the reconstructed image and the k-space structure, thus implying that the convolution-structured Hankel or Toeplitz matrices leveraged from the k-space measurements must show a distinct null-space vector association with the kernel. As a result, any low-rank recovery algorithm can be used for image reconstruction. The simultaneous autocalibrating and k-space estimation (SAKE) (Shin et al., 2014) algorithm used the block Hankel form of the local neighborhood in k-space across all coils for image reconstruction. Instead of using correlations across multiple coils, the low-rank matrix modeling of local k-space neighborhoods (LORAKS) (Haldar, 2013) utilized the image phase constraint and finite image support (in image space) to produce very good image reconstruction quality. The LORAKS method does not require any explicit calibration of k-space samples and can work well even if some of the constraints such as sparsity, limited support, and smooth phase are not strictly satisfied. The AC-LORAKS (Haldar, 2015) improved the performance of LORAKS by assuming access to the ACS measurements, i.e.:

| (13) |

where is a mapping function that transforms the k-space measurement to a structured low-rank matrix, and the matrix is the null space matrix. The mapping basically takes care of the constraints such as sparsity, limited support, and smooth phase. In the PRUNO (Zhang et al., 2011) method, the mapping only imposes limited support and parallel imaging constraints. On the other hand, the number of nullspace vectors in is set to 1 in the SPIRiT method (Lustig and Pauly, 2010). The ALOHA method (Lee et al., 2016) uses the weighted k-space along with transform-domain sparsity of the image. Different from them, the method of (Otazo et al., 2015) uses a spatio-temporal regularization.

2.2 Image Space Rectification based Methods

These methods directly estimate the image from k-space by imposing prior knowledge about the properties of the image (e.g., spatial smoothness). Leveraging image prior through linear interpolation works well in practice but largely suffers from sub-optimal solutions and as a result the practical cone beam algorithm (Laurette et al., 1996) was introduced that improves image quality in such a scenario. The sensitivity encoding (SENSE) method (Pruessmann et al., 1999) is an image unfolding method that unfolds the periodic repetitions from the knowledge of the coil. In SENSE, the signal in a pixel location is a weighted sum of coil sensitivities, i.e.;

| (14) |

where is the height of image , is the number of coils, and is the coil sensitivity of the coil. The is the kth coil image that has aliased pixels at a certain position, and is a particular row and is the column index counting from the top of the image to the bottom. The is the sensitivity matrix that assembles the corresponding sensitivity values of the coils at the locations of the involved pixels in the full FOV image x. The coil images , the sensitivity matrix , and the image x in Eqn. 14 can be re-written as;

| (15) |

By knowing the complex sensitivities at the corresponding positions, we can compute the generalized inverse of the sensitivity matrix:

| (16) |

Please note that, represents the complex coil image values at the chosen pixel and has length . In k-t SENSE and k-t BLAST (Tsao et al., 2003) the information about the spatio-temporal support is obtained from the training dataset that helps to reduce aliasing.

The physics based methods allow statistical modeling instead of simple geometric modeling present in classical methods and reconstruct the MR images using the underlying physics of the imaging system (Roeloffs et al., 2016; Tran-Gia et al., 2016; Maier et al., 2019; Tran-Gia et al., 2013; Hilbert et al., 2018; Sumpf et al., 2011; Ben-Eliezer et al., 2016; Zimmermann et al., 2017; Schneider et al., 2020). These types of methods sometimes use very simplistic anatomical knowledge based priors (Chen et al., 1991; Gindi et al., 1993; Cao and Levin, 1997) or “pixel neighborhood” (Szeliski, 2010) information via a Markov Random Field based regularization (Sacco, 1990; Besag, 1986).

A potential function based regularization takes the form , where the function, , could be a hyperbolic, Gaussian (Bouman and Sauer, 1993) or any edge-preserving function (Thibault et al., 2007). The Total Variation (TV) could also be thought of as one such potential function. The (Rasch et al., 2018) shows a variational approach for the reconstruction of subsampled dynamic MR data, which combines smooth, temporal regularization with spatial total variation regularization.

Different from Total Variation (TV) approaches, (Bostan et al., 2012) proposed a stochastic modeling approach that is based on the solution of a stochastic differential equation (SDE) driven by non-Gaussian noise. Such stochastic modeling approaches promote the use of nonquadratic regularization functionals by tying them to some generative, continuous-domain signal model.

The Compressed Sensing (CS) based methods impose sparsity in the image domain by modifying Eqn. 2 to the following:

| (17) |

where is an operator that makes x sparse. The norm is used to promote sparsity in the transform or image domain. The norm minimization can be pursued using a basis pursuit or greedy algorithm (Boyd et al., 2004). However, use of non-convex quasi-norms (Chartrand, 2007; Zhao and Hu, 2008; Chartrand and Staneva, 2008; Saab et al., 2008) show an increase in robustness to noise and image non-sparsity. The structured sparsity theory (Boyer et al., 2019) shows that only measurements are sufficient to reconstruct MR images when -sparse data with size are given. The kt-SPARSE approach of (Lustig et al., 2006) uses a spatio-temporal regularization for high SNR reconstruction.

Iterative sparsity based methods (Ravishankar and Bresler, 2012; Liu et al., 2015, 2016) assume that the image can be expressed as a linear combination of the columns (atoms) from a dictionary such that and h is the coefficient vector. Hence Eqn. 4 becomes:

| (18) |

The SOUP-DIL method (Bruckstein et al., 2009) uses an exact block coordinate descent scheme for optimization while a few methods (Chen et al., 2008; Lauzier et al., 2012; Chen et al., 2008) assume to have a prior image , i.e. , to optimize Eqn. 4. The method in (Caballero et al., 2014) optimally sparsifies the spatio-temporal data by training an overcomplete basis of atoms.

The method in (Hongyi Gu, 2021) shows a DL based approach to leverage wavelets for reconstruction.

The transform based methods are a generalization of the CS approach that assumes a sparse approximation of the image along with a regularization of the transform itself, i.e., , where h is the sparse representation of x and is the modeling error. The method in (Ravishankar and Bresler, 2015) proposed a regularization as follows:

| (19) |

where is the transform regularizer. In this context, the STROLLR method (Wen et al., 2018) used a global and a local regularizer.

In general, Eqn. 5 is a non-convex function and cannot be optimized directly with gradient descent update rules. The unrolled optimization algorithm procedure decouples the data consistency term and the regularization term by leveraging variable splitting in Eqn 4 as follows:

| (20) |

where the regularization is decoupled using a quadratic penalty on x and an auxiliary random variable z. Eqn 20 is optimized via alternate minimization of

| (21) |

and the data consistency term:

| (22) |

where the are the intermediate variables at iteration . The alternating direction method of multiplier networks (ADMM net) introduce a set of intermediate variables, , and eventually we have a set of dictionaries, , such that, , collectively promote sparsity. The basic ADMM net update (Yang et al., 2018) is as follows:

| (23) |

where can be any sparsity promoting operator and is called a multiplier. The iterative shrinkage thresholding algorithm (ISTA) solves this CS optimization problem as follows:

| (24) |

Later in this paper, we shall show how ISTA and ADMM can be organically used within the modern DL techniques in Sec. 5.3.

3 Review of Deep Learning Building Blocks

In this section, we will describe basic building blocks that are individually or collectively used to develop complex DL methods that work in practice. Any DL method, by design, has three major components: the network structure, the training process, and the dataset on which the DL method is trained and tested. We shall discuss each one of them below in detail.

3.1 Various Deep Learning Frameworks

Perceptron: The journey of DL started in the year 1943 when Pitts and McCulloch (McCulloch and Pitts, 1943) gave the mathematical model of a biological neuron. This mathematical model is based on the “all or none” behavioral dogma of a biological neuron. Soon after, Rosenblatt provided the perceptron learning algorithm (Rosenblatt, 1957) which is a mathematical model based on the behaviour of a neuron. The perceptron resembles the structure of a neuron with dendrites, axons and a cell body. The basic perceptron is a binary classification algorithm of the following form:

| (25) |

where ’s are the components of an image vector x, ’s are the corresponding weights that determine the slope of the classification line, and is the bias term. This setup collectively resembles the “all or none” working principle of a neuron. However, in the famous book of Minsky and Papert (Minsky and Papert, 2017) called “Perpetron” it was shown that the perceptron can’t classify non-separable points such as an exclusive-OR (XOR) function.

Multilayer Perceptron (MLP): It was understood that the non-separability problem of perceptron can be overcome by a multilayer perceptron (Minsky and Papert, 2017) but the research stalled due to the unavailability of a proper training rule. In the year 1986, Rumelhart et al. (Rumelhart et al., 1986) proposed the famous “backpropagation algorithm” that provided fresh air to the study of neural network. A Multilayer Perceptron (MLP) uses several layers of multiple perceptrons to perform nonlinear classification. A MLP is comprised of an input layer, an output layer, and several densely connected in-between layers called hidden layers:

| (26) |

Along with the hidden layers and the input-output layer, the MLP learns features of the input dataset and uses them to perform classification. The dense connection among hidden layers, input and output layers often creates a major computational bottleneck when the input dimension is very high.

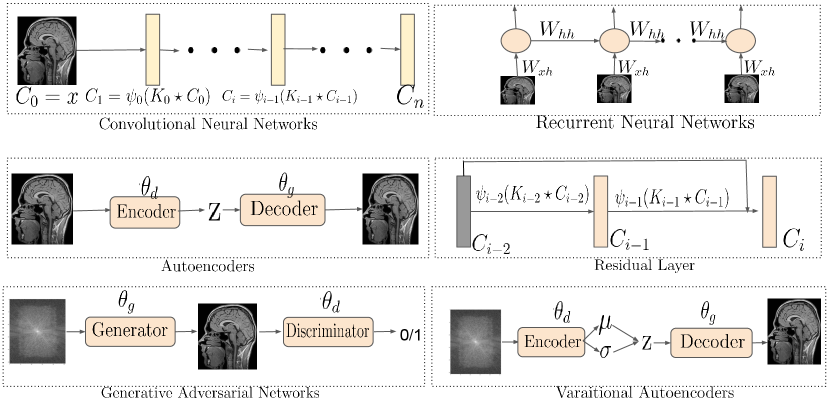

Neocognitron or the Convolutional Neural Network (CNN): The dense connection (also known as global connection) of a MLP was too flexible a model and prone to overfitting and sometimes had large computational overhead. To cope with this situation, a local sliding window based network with shared weights was proposed in early 1980s called neocognitron network (Fukushima and Miyake, 1982) and later popularized as Convolutional Neural Network (CNN) in the year 1998 (LeCun et al., 1989). Similar to the formulation of (Liang et al., 2020a), we write the feedforward process of a CNN as follows:

| (27) |

where is the hidden layer comprised of -number of feature maps each of size , is the kernel that performs a convolution operation on , and are activation functions to promote non-linearity. We show a vanilla kernel operation in Eqn. 27. Please note that, the layers of the CNN can either be a fully connected dense layer, a max-pooling layer that downsizes the input, or a dropout layer to perform regularization that is not shown in Eqn. 27.

Recurrent Neural Networks (RNNs): A CNN can learn hidden features of a dataset using its inherent deep structure and local connectivities through a convolution kernel. But they are not capable of learning the time dependence in signals. The recurrent neural network (RNN) (Rumelhart et al., 1986) at its basic form is a time series neural network with the following form:

| (28) |

where is the time and the RNN takes the input x in a sequential manner. However, the RNN suffers from the problem of “vanishing gradient”. The vanishing gradient is observed when gradients from output layer of a RNN trained with gradient based optimization method changes parameter values by a very small amount, thus effecting no change in parameter learning. The Long Short Term Memory (LSTM) network (Hochreiter and Schmidhuber, 1997) uses memory gates, and sigmoid and/or tanh activation function and later ReLU activation function (see Sec. 3.2 for activation functions) to control the gradient signals and overcome the vanishing gradient problem.

Transformer Networks: Although the LSTM has seen tremendous success in DL and MR reconstruction, there are a few problems associated with the LSTM model (Vaswani et al., 2017) such as: (i) the LSTM networks perform a sequential processing of input; and (ii) the short attention span of the hidden states that may not learn a good contextual representation of input. Such shortcomings are largely mitigated using a recent advancement called the Transformer Network (Vaswani et al., 2017). A transformer network has a self-attention mechanism222Self-attention: The attention mechanism provides a way to know which part of the input is to be given more focus. The self-attention, on the other hand, measures the contextual relation of an input by allowing it to interact with itself. Let’s assume that we are at layer that has number of channels and number of locations on a feature map. We get two feature vectors, and , by transforming the layer to a vector (typically done with a convolution). The contextual similarity of these to vectors and is measured by; The output is , where , and , . Here, the are learnable matrices that collectively provide the self-attention vector for a given layer . , a positional embedding and a non-sequential input processing setup and empirically this configuration outperforms the LSTM networks by a large margin (Vaswani et al., 2017).

3.2 Activation Functions

The activation function, , operates on a node or a layer of a neural network and provides a boolean output, probabilistic output or output within a range. The step activation function was proposed by McCulloch & Pitts in (McCulloch and Pitts, 1943) with the following form: and 0 otherwise. Several initial works also used the hyperbolic tangent function, , as an activation function that provides value within the range . The sigmoid activation function, , is a very common choice and provides only positive values within the range . However, one major disadvantage of the sigmoid activation function is that its derivative, , quickly saturates to zero which leads to the vanishing gradient problem. This problem was addressed by adding a Rectified Linear Unit (ReLu) to the network (Brownlee, 2019), with the derivative or 0 elsewhere.

3.3 Network Structures

The VGG Network: In late 2015, Zisserman et al. published their seminal paper with the title “very deep convolutional networks for large-scale image recognition” (VGG) (Simonyan and Zisserman, 2014) that presents a 16-layered network called VGG network. Each layer of the VGG network has an increasing number of channels. The network was shown to achieve state-of-the-art level performance in Computer Vision tasks such as classification, recognition, etc.

The ResNet Model: A recently developed model called Residual Networks or ResNet (He et al., 2016), modifies the layer interaction shown in Eqn. 27 to the following form, , and provides a “shortcut connection” to the hidden layers. The identity mapping using the shortcut connections has a large positive impact on the performance and stability of the networks.

UNet: A UNet architecture (Ronneberger et al., 2015) was proposed to perform image segmentation task in biomedical images. The total end-to-end architecture pictorially resembles the english letter “U” and has a encoder module and a decoder module. Each encoder layer is comprised of unpadded convolution, a rectified linear unit (ReLU in Sec. 3.2), and a pooling layer, which collectively downsample the image to some latent space. The decoder has the same number of layers as the encoder. Each decoder layer upsamples the data from its previous layer until the input dimension is reached. This architecture has been shown to provide good quantitative results on several datasets.

Autoencoders: The autoencoders (AE) are a type of machine learning models that capture the patterns or regularities of input data samples in an unsupervised fashion by mapping the target values to be equal to the input values (i.e. identity mapping). For example, given a data point x randomly sampled from the training data distribution , a standard AE learns a low-dimensional representation z using an encoder network, , that is parameterized by . The low-dimensional representation z, also called the latent representation, is subsequently projected back to the input dimension using a decoder network, , that is parameterized by . The model parameters, i.e. , are trained using the standard back propagation algorithm with the following optimization objective:

| (29) |

From a Bayesian learning perspective, an AE learns a posterior density, , using the encoder network, , and a decoder network, . The vanilla autoencoder, in a way, can be thought of as a non-linear principal component analysis (PCA) (Hinton and Salakhutdinov, 2006) that progressively reduces the input dimension using the encoder network and finds regularities or patterns in the data samples.

Variational Autoencoder Networks: Variational Autoecoder (VAE) is basically an autoencoder network that is comprised of an encoder network that estimates the posterior distribution and the inference with a decoder network . However, the posterior is intractable333Intractability: We can learn the density of the latent representation from the data points themselves, i.e. , by expanding the Bayes theorem of conditional distribution. In this equation, the numerator is computed for a single realization of data points. However, the denominator is the marginal distribution of data points, , which are complex and hard to estimate; thus, leading to intractability of estimating the posterior. and several methods have been proposed to approximate the inference using techniques such as the Metropolis-Hasting (Metropolis et al., 1953) and variational inference (VI) algorithms (Kingma and Welling, 2013). The main essence of VI algorithm is to estimate an intractable probability density, i.e. in our case, from a class of tractable probability densities, , with an objective to finding a density, , almost similar to . We then sample from the tractable density instead of to get an approximate estimation, i.e.

| (30) |

Here, is the KL divergence (Kullback and Leibler, 1951). The VI algorithm, however, typically never converges to the global optimal solution but provides a very fast approximation. The VAE consists of three components: (i) the encoder network that observes and encodes a data point x from the training dataset and provides the mean and variance of the approximate posterior, i.e. from a batch of data points ; (ii) a prior distribution , typically an isotropic Gaussian from which z is sampled, and (iii) a generator network that generates data points given a sample from the latent space z. The VAE, however, cannot directly optimize the VI, i.e. , as the KL divergence requires an estimate of the intractable density , i.e. . As a result, VAE estimates the evidence lower bound (ELBO) that is similar to KL divergence, i.e.:

| (31) |

Since , optimizing Eqn. (31) provides a good approximation of the marginal density . Please note that, Eqn. 31 is similar to Eqn. 4 where the first term in Eqn. 31 is the data consistency term and the KL divergenece term acts as a regularizer.

Generative Adversarial Networks: A vanilla Generative Adversarial Networks (GAN) setup, by design, is an interplay between two neural networks called the generator, that is , and the discriminator parameterized by and respectively. The samples the latent vector and generates . While the discriminator, on the other hand, takes x (or ) as input and provides a decision on x being sampled from a real data distribution or from . The parameters are trained using a game-theoretic adversarial objective, i.e.:

| (32) |

As the training progresses, the generator progressively learns a strategy to generate realistic looking images, while the discriminator learns to discriminate the generated and real samples.

3.4 Loss Functions

In Sec. 1.1, we mentioned the loss function, , that estimates the empirical loss and the generalization error. Loss functions that are typically used in MR image reconstruction using DL methods are the Mean Squared Error (MSE), Peak Signal to Noise Ratio (PSNR) and the Structural Similarity Loss function (SSIM), or an loss to optimize Eqns. 3, 4, 5. The MSE loss between an image x and its noisy approximation is defined as, , where is the number of samples. The root MSE (RMSE) is essentially the squared root of the MSE, . The loss, , provides the absolute difference and is typically used as a regularization term to promote sparsity. The PSNR is defined using the MSE, PSNR, where is the highest pixel value attained by the image x. The PSNR metric captures how strongly the noise in the data affects the fidelity of the approximation with respect to the maximum possible strength of the signal (hence the name peak signal to noise ratio). The main concern with and PSNR is that they penalize large deviations much more than smaller ones (Zhao et al., 2016) (e.g., outliers are penalized more than smaller anatomical details). The SSIM loss for a pixel in the image x and the approximation captures the perceptual similarity of two images: SSIM, here are two constants, and is the mean and is the standard deviation.

The VGG loss: It is shown in (Johnson et al., 2016) that the deeper layer feature maps (feature maps are discussed in Eqn. 27) of a VGG-16 network, i.e. a VGG network that has 16 layers, can be used to compare perceptual similarity of images. Let us assume that, the layer of a VGG network has distinct feature maps each of size . The matrix , stores the activations of the filter at position of layer . Then, the method computes feature correlation using: , where any conveys the activation of the filter at position in layer . The correlation is considered as a VGG-loss function.

4 Inverse Mapping using Deep Generative Models

Based on how the generator network, , is optimized in Eqn. 6, we get different manifestations of deep generative networks such as Generative Adversarial Networks, Bayesian Networks, etc. In this section, we shall discuss specifics on how these networks are used in MR reconstruction.

4.1 Generative Adversarial Networks (GANs)

We gave a brief introduction to GAN in Sec. 3.3 and in this section we shall take a closer look on the different GAN methods used to learn the inverse mapping from k-space measurements to the MR image space.

Inverse Mapping from k-space: The current GAN based k-space methods can be broadly classified into two categories: (i) methods that directly operate on the k-space y and reconstruct the image x by learning a non-linear mapping and (ii) methods that impute the missing k-space lines in the undersampled k-space measurements. In the following paragraphs, we first discuss the recent GAN based direct k-space to MR image generation methods followed by the undersampled k-space to full k-space generation methods.

The direct k-space to image space reconstruction methods, as shown in Fig. 3 (a), are based on the premise that the missing k-space lines can be estimated from the acquired k-space lines provided we have a good non-linear interpolation function, i.e.;

| (33) |

This GAN framework was used in (Oksuz et al., 2018) for correcting motion artifacts in cardiac imaging using a generic AUTOMAP network444AUTOMAP (Zhu et al., 2018): This is a two stage network resembling the unrolled optimization like methods (Schlemper et al., 2017). The first sub network ensures the data consistency, while, the other sub network helps in refinement of the image. The flexibility of AUTOMAP enables it to learn the k-space to image space mapping from alternate domains instead of strictly from a paired k-space to MR image training dataset.. Such AUTOMAP-like generator architectures not only improve the reconstruction quality but help in other downstream tasks such as MR image segmentation (Oksuz et al., 2019a, 2020, b). However, while the “AUTOMAP as generator” based methods solve the broader problem of motion artifacts, but they largely fail to solve the banding artifacts along the phase encoding direction. To address this problem, a method called MRI Banding Removal via Adversarial Training (Defazio et al., 2020) leverages a perceptual loss along with the discriminator loss in Eqn. 32. The perceptual loss ensures data consistency, while, the discriminator loss checks whether: (i) the generated image has a horizontal () or a vertical () banding; and (ii) the generated image resembles the real image or not. With a 4x acceleration, a 12-layered UNet generator and a ResNet discriminator, the methodology has shown remarkable improvements (Defazio et al., 2020) on fastMRI dataset.

Instead of leveraging the k-space regularization within the parameter space of a GAN (Oksuz et al., 2018, 2019a), the k-space data imputation using GAN directly operates on the k-space measurements to regularize Eqn. 32. To elaborate, these type of methods estimate the missing k-space lines by learning a non-linear interpolation function (similar to GRAPPA) within an adversarial learning framework, i.e.

| (34) |

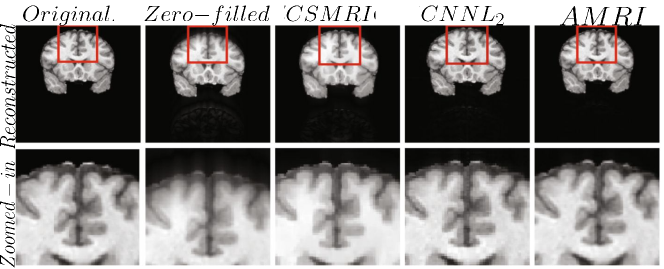

The accelerated magnetic resonance imaging (AMRI) by adversarial neural network method (Shitrit and Raviv, 2017) aims to generate the missing k-space lines, from using a conditional GAN, . The combined is Fourier transformed and passed to the discriminator. The AMRI method showed improved PSNR value with good reconstruction quality and no significant artifacts as shown in Fig. 4.

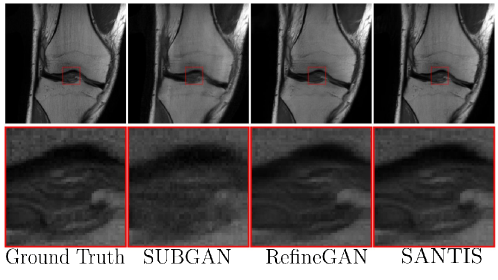

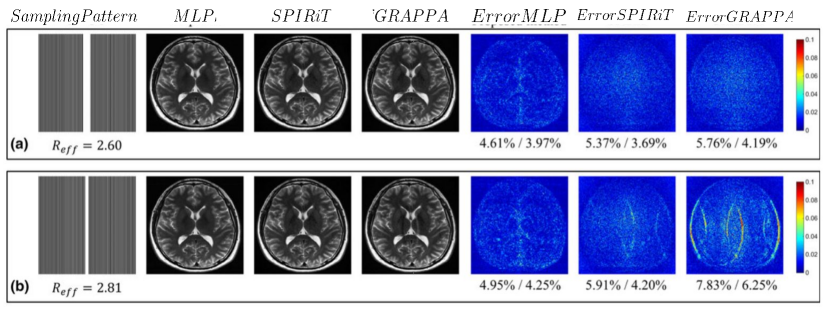

Later, in subsampled brain MRI reconstruction by generative adversarial neural networks method (SUBGAN) (Shaul et al., 2020), the authors discussed the importance of temporal context and how that mitigates the noise associated with the target’s movement. The UNet-based generator in SUBGAN takes three adjacent subsampled k-space slices taken at timestamps and provides the reconstructed image. The method achieved a performance boost of in PSNR with respect to the other state-of-the-art GAN methods while considering of the original k-space samples on IXI dataset (Rowland et al., 2004). We also show reconstruction quality of SUBGAN on fastMRI dataset in Fig. 5. Another method called multi-channel GAN (Zhang et al., 2018b) advocates the use raw of k-space measurements from all coils and has shown good k-space reconstruction and lower background noise compared to classical parallel imaging methods like GRAPPA and SPIRiT. However, we note that this method achieved dB lower PSNR than the GRAPPA and SPIRiT methods.

Despite their success in MR reconstruction from feasible sampling patterns of k-space, the previous models we have discussed so far have the following limitations: (i) they need unaliased images for training, (ii) they need paired k-space and image space data, or (iii) the need fully sampled k-space data. In contrast, we note a recent work called unsupervised MR reconstruction with GANs (Cole et al., 2020) that only requires the undersampled k-space data coming from the receiver coils and optimizes a network for image reconstruction. Different from AutomapGAN (Oksuz et al., 2019a), in this setup the generator provides the undersampled k-space (instead of the MR image as in case of AutomapGAN) after applying Fourier transform, sensitivity encoding and a random-sampling mask on the generated image, i.e. . The discriminator takes the k-space measurements instead of an MR image and provides thee learned signal to the generator.

Image space Rectification Methods: Image space rectification methods operate on the image space and learn to reduce noise and/or aliasing artifacts by updating Eqn. 4 to the following form:

| (35) |

The GAN framework for deep de-aliasing (Yang et al., 2017) regularizes the reconstruction by adopting several image priors such as: (i) image content information like object boundary, shape, and orientation, along with using a perceptual loss function: , (ii) data consistency is ensured using a frequency domain loss , and (iii) a VGG loss (see Sec. 3.4) which enforces semantic similarity between the reconstructed and the ground truth images. The method demonstrated a dB improvement in PSNR score on IXI dataset with 30% undersampling. However, it was observed that the finer details were lost during the process of de-aliasing with a CNN based GAN network. In the paper called self-attention and relative average discriminator based GAN (SARAGAN) (Yuan et al., 2020), the authors show that fine details tend to fade away due to smaller size of the convolution kernels, leading to poor performance. Consequently, the SARAGAN method adopts a relativistic discriminator (Jolicoeur-Martineau, 2018) along with a self-attention network (see Sec. 3.1 for self-attention) to optimize the following equation that is different from Eqn. 35:

| (36) |

where, is the sigmoid activation function discussed in Sec. 3.2. This method showed excellent performance in the MICCAI 2013 grand challenge brain MRI reconstruction dataset and got an SSIM of and PSNR of with sampling rate. Among other methods, sparsity based constraints are imposed as a regularizer to Eqn. 35 in compressed sensing GAN (GANCS) (Mardani et al., 2018a), RefineGAN (Quan et al., 2018), and the structure preserving GAN (Deora et al., 2020; Lee et al., 2018) methods. Some qualitative results using the RefineGAN method are shown in Fig. 5. On the other hand, methods like PIC-GAN (Lv et al., 2021) and (MGAN) (Zhang et al., 2018a) use a SENSE-like reconstruction strategy that combines MR images reconstructed from parallel receiver coils using a GAN framework. Such methods have also shown good performance with low normalized mean squared error in the knee dataset.

Combined k-space and image space methods: Thus far, we have discussed k-space (GRAPPA-like GAN methods) and image space (SENSE-like GAN methods) MR reconstruction methods that work in isolation. However, both these strategies can be combined together to leverage the advantages of both methods. Recently, a method called sampling augmented neural network with incoherent structure for MR image reconstruction (SANTIS) (Liu et al., 2019) was proposed that leverages a cycle consistency loss, in addition to a GAN loss, i.e.,

| (37) |

where the function is another generator network that projects back the MR image to k-space. The method achieved an SSIM value of on the 4x undersampled knee FastMRI dataset (see Fig. 5 and Table 1). In the collaborative GAN method (CollaGAN) (Lee et al., 2019), instead of cycle consistency between k-space and the image domain from a single image, they consider a collection of domains such as T1-weighted and T2-weighted data and try to reconstruct the MR images with cycle consistency in all domains. The InverseGAN (Narnhofer et al., 2019) method performs cycle consistency using a single network that learns both the forward and inverse mapping from and to k-space.

4.2 Bayesian Learning

Bayes’s theorem expresses the posterior as a function of the k-space data likelihood, and the prior with the form also known as the “product-of-the-experts” in DL literature. In (Tezcan et al., 2018), the prior is estimated with a Monte Carlo sampling technique which is computationally intensive. To overcome the computational cost, several authors have proposed to learn a non-linear mapping from undersampled k-space to image space using VAEs. In these VAE based methods (Tezcan et al., 2019; Gaillochet et al., 2020; Van Essen et al., 2012), the networks are trained on image patches obtained from k-space measurement and the VAE network is optimized using these patches with the following cost function: ,

| (38) |

These methods have mainly been evaluated on the Human Connectome Project (HCP) (Van Essen et al., 2012) dataset and have shown good performance on 4x undersampled images (see Fig 6).

Different from them, PixelCNN+ considers each pixel as random variable and estimates the joint distribution of pixels over an image x as the product of conditional distribution, i.e. . The method proposed in (Luo et al., 2020) considers a generative regression model called PixelCNN+ (Oord et al., 2016) to estimate the prior . This method demonstrated very good performance, i.e. they achieved more than 3 dB PSNR improvement than the current state-of-the-art methods like GRAPPA, variational networks (see Sec. 5.3) and SPIRIT algorithms. The Recurrent Inference Machines (RIM) for accelerated MRI reconstruction (Lonning et al., 2018) is an general inverse problem solver that performs a step-wise reassessments of the maximum a posteriori and infers the inverse transform of a forward model. Despite showing good results, the overall computational cost and running time is very high compared to GAN or VAE based methods.

4.3 Active Acquisition Methods

Combined k-space and image methods: All of the above methods consider a fixed k-space sampling that is predetermined by the user. This sampling process is isolated from the reconstruction pipeline. Recent works have investigated if the sampling process itself can be included as a part of the reconstruction optimization framework. A basic overview of these works can be described as follows:

- •

The algorithm has access to the fully sampled training MR images

- •

The encoder, , learns the sampling pattern by optimizing parameter .

- •

The decoder, , is the reconstruction algorithm that is parameterized by

- •

The encoder is optimized by minimizing the empirical risk on the training MR images, , where is some arbitrary loss of the decoder.

This strategy was used in LOUPE (Bahadir et al., 2019) where a network was learnt to optimize the under-sampling pattern such that provided a probabilistic sampling mask assuming each line in k-space as an independent Bernoulli random variable by optimizing:

| (39) |

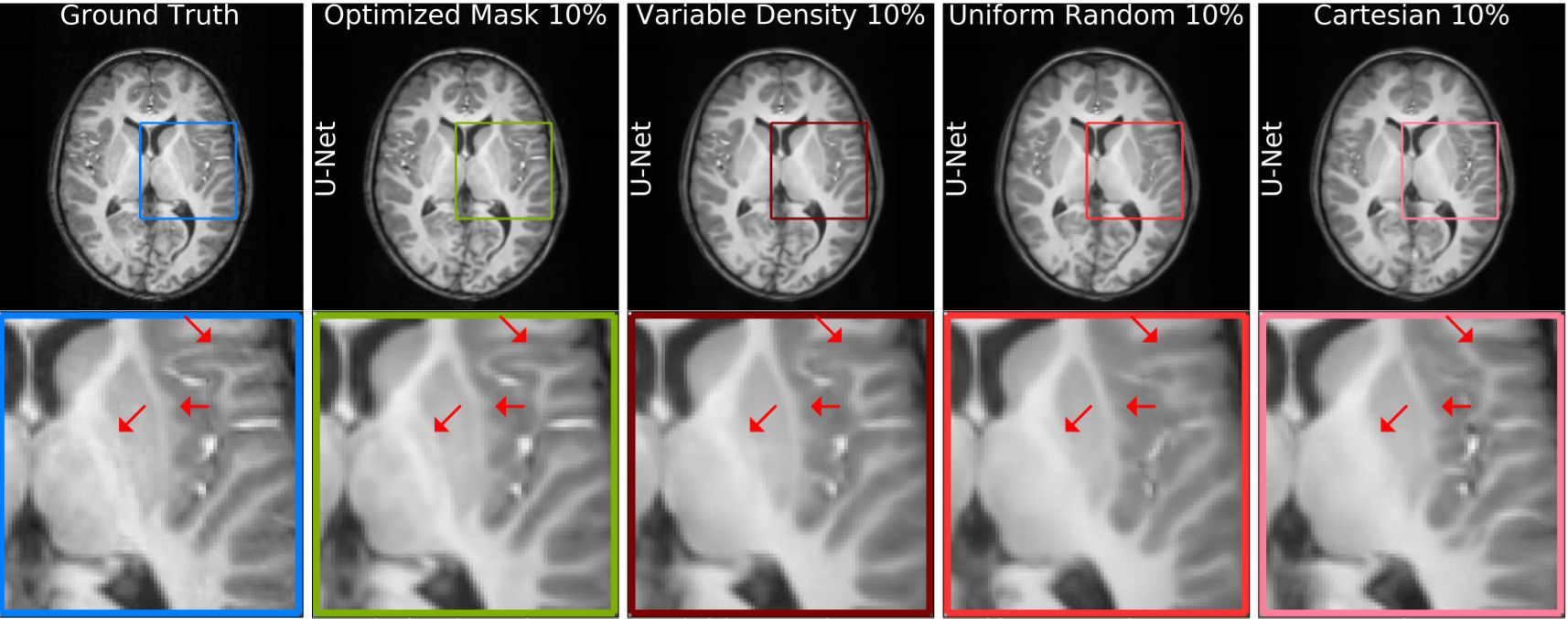

where is an anti-aliasing deep neural network. Experiments on -weighted structural brain MRI scans show that the LOUPE method improves PSNR by with respect to the state-of-the-art methods that is shown in Fig. 7, second column.

A follow-up work to LOUPE (Bahadir et al., 2020) imposed a hard sparsity constraint on to ensure robustness to noise. In the deep active acquisition method (Zhang et al., 2019b), is termed the evaluator and is the reconstruction network. Given a zero-filled MR image, provides the reconstructed image and the uncertainty map. The evaluator decomposes the reconstructed image and ground truth image into spectral maps and provides a score to each k-space line of the reconstructed image. Based on the scores, the methodology decides to acquire the appropriate k-space locations from the MR scanner. The Deep Probabilistic Subsampling (DPS) method in (Huijben et al., 2019) develops a task-adaptive probabilistic undersampling scheme using a softmax based approach followed by MR reconstruction. On the other hand, the work on joint model based deep learning (J-MoDL) (Aggarwal and Jacob, 2020) optimized both sampling and reconstruction using Eqns 21 and 22 to jointly optimize a data consistency network and a regularization network. The data consistency network is a residual network that acts as a denoiser, while the regularization network decides the sampling scheme. The PILOT (Weiss et al., 2021) method also jointly optimizes the k-space sampling and the reconstruction. The network has a sub-sampling layer to decide the importance of a k-space line, while the regridding and the task layer jointly reconstruct the image. The optimal k-space lines are chosen either using the greedy traveling salesman problem or imposing acquisition machine constraints. Joint optimization of k-space sampling and reconstruction also appeared in recent methods such as (Heng Yu, 2021; Guanxiong Luo, 2021).

5 Inverse Mapping using Non-generative Models

In this section we discuss non-generative models that use the following optimization framework:

| (40) |

The non-generative models also have a data consistency term and a regularization term similar to Eqn. 4. As discussed earlier in section 1.2, however, the non-generative models do not assume any underlying distribution of the data and learn the inverse mapping by parameter optimization using Eqn. 7. Below, we discuss the different types of non-generative models.

5.1 Perceptron Based Models

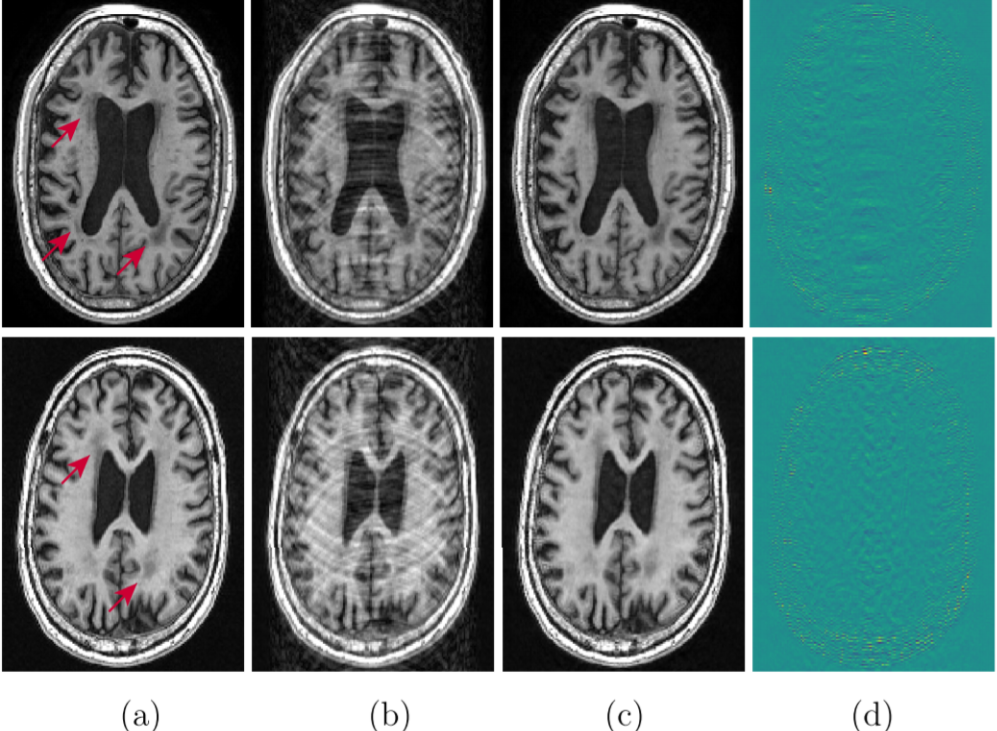

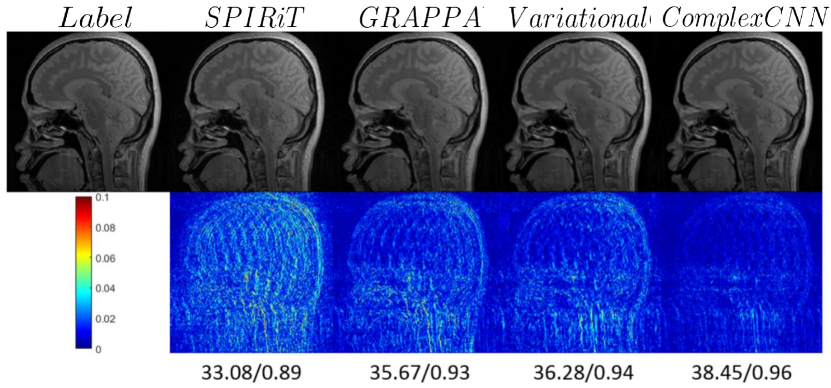

The work in (Kwon et al., 2017; Cohen et al., 2018) developed a multi-level perceptron (MLP) based learning technique that learns a nonlinear relationship between the k-space measurements, the aliased images, and the desired unaliased images. The input to an MLP is the real and imaginary part of an aliased image and the k-space measurement, and the output is the corresponding unaliased image. We show a visual comparison of this method (Kwon et al., 2017) with the SPIRiT and GRAPPA methods in Fig. 8. This method showed better performance with lower RMSE at different undersampling factors.

5.2 Untrained Networks

So far, we have talked about various deep leraning architectures and their training strategies using a given training dataset. The most exciting question one can ask “is it always necessary to train a DL network to obtain the best result at test time?”, or “can we solve the inverse problem using DL similar to classical methods that do not necessarily require a training phase to learn the parameter priors”? We note several state-of-the-art methods that uses ACS lines or other k-space lines of the k-space measurement to train a DL network instead of an MR image as a ground truth. The robust artificial neural network for k-space interpolation (RAKI) (Akçakaya et al., 2019) trains a CNN by using the ACS lines. The RAKI methodology shares some commonality with GRAPPA. However, the main distinction is the linear estimation of the convolution kernel in GRAPPA which is replaced with a non-linear kernel in CNN. The CNN kernels are optimized using the following objective function:

| (41) |

where, are the acquired ACS lines, and is the CNN network that performs MRI reconstruction. The RAKI method has shown improvement in RMSE score with respect to GRAPPA on phantom images at acceleration factors respectively. A followup work called residual RAKI (rRAKI) (Zhang et al., 2019a) improves the RMSE score with the help of a residual network structure. The LORAKI (Kim et al., 2019) method is based on the low rank assumption of LORAKS (Haldar, 2013). It uses a recurrent CNN network to combine the auto-calibrated LORAKS (Haldar, 2013) and the RAKI (Akçakaya et al., 2019) methods. On five different slices of a -weighted dataset, the LORAKI method has shown good improvement in SSIM scores compared to GRAPPA, RAKI, AC-LORAKS among others. Later, the sRAKI-RNN (Hosseini et al., 2019b) method proposed a unified framework that performs regularization through calibration and data consistency using a more simplified RNN network than LORAKI.

Deep Image Prior (DIP) and its variants (Ulyanov et al., 2018; Cheng et al., 2019; Gandelsman et al., 2019) have shown outstanding results on computer vision tasks such as denoising, in-painting, super resolution, domain translation etc. A vanilla DIP network uses a randomly weighted autoencoder, , that reconstructs a clean image given a fixed noise vector . The network is optimized using the “ground truth” noisy image . A manual or user chosen “early stopping” of the optimization is required as optimization until convergence overfits to noise in the image. A recent work called Deep Decoder (Heckel and Hand, 2018) shows that an under-parameterized decoder network, , is not expressive enough to learn the high frequency components such as noise and can nicely approximate the denoised version of the image. The Deep Decoder uses pixel-wise linear combinations of channels and shared weights in spatial dimensions that collectively help it to learn relationships and characteristics of nearby pixels. It has been recently understood that such advancements can directly be applied to MR image reconstruction (Mohammad Zalbagi Darestani, 2021). Given a set of k-space measurements from receiver coils, an un-trained network uses an iterative first order method to estimate parameters by optimizing;

| (42) |

The network is initialized with random weight and then optimized using Eqn. 42 to obtain . The work in (Dave Van Veen, 2021) introduces a feature map regularization: , in Eqn. 42 where matches the features of layer. This term encourages fidelity between the network’s intermediate representations and the acquired k-space measurements. The works in (Heckel, 2019; Heckel and Soltanolkotabi, 2020) provide theoretical guarantees on recovery of image from the k-space measurements. Recently proposed method termed “Scan-Specific Artifact Reduction in k-space” or SPARK (Arefeen et al., 2021) trains a CNN to estimate and correct k-space errors made by an input reconstruction technique. The results of this method are also quite impressive given that only ACS lines are used for training the CNN. Along similar lines, the authors in (Yoo et al., 2021) used the Deep Image Prior setup for dynamic MRI reconstruction.

In the self supervised approach, a subset of the undersampled k-space lines are typically used to validate the DL network in addition to the acquired undersampled k-space lines that are used to optimize the network. Work in this direction divides the total k-space lines into two portions:(i) k-space lines for data consistency and (ii) k-space lines for regularization. In (Yaman et al., 2020), the authors use a multi-fold validation set of k-space data to optimize the DL network. Other methods such as SRAKI (Hosseini et al., 2020, 2019a) use the self-supervision to reconstruct the images. A deep Reinforcement learning based approach is studied in (Jin et al., 2019) that deploys a reconstruction network and an active acquisition network. Another method such as (Yaman et al., 2021b) provides an unrolled optimization algorithm to estimate the missing k-space lines. Other methods that fall under this umbrella include the transformer based method (Korkmaz et al., 2021) and a scan specific optimization method (Yaman et al., 2021a; Tamir et al., 2019).

5.3 Convolutional Neural Networks

Spatial models are mostly dominated by the various flavours of CNNs such as complex-valued CNN (Wang et al., 2020b; Cole et al., 2019), unrolled optimization using CNN (Schlemper et al., 2017), variational networks (Hammernik et al., 2018), etc. Depending on how the MR images are reconstructed, we divide all CNN based spatial methods into the following categories.

Inverse mapping from k-space: The AUTOMAP (Zhu et al., 2018) learns a reconstruction mapping using a network having three fully connected layers (3 FCs) and two convolutional layers (2 Convs) with an input dimension of . Any image of size more than is cropped and subsampled to . The final model yielded a PSNR of 28.2 on FastMRI knee dataset outperforming the previous validation baseline of 25.9 on the same dataset. Different from these methods, there are a few works (Wang et al., 2020b; Cole et al., 2019) that have used CNN networks with complex valued kernels to reconstruct MR images from complex valued k-space measurements. The method in (Wang et al., 2020a) uses a complex valued ResNet (that is a type of CNN) network and is shown to obtain good results on 12-channel fully sampled k-space dataset (see Fig. 9 for a visual comparison with other methods). Another method uses a Laplacian pyramid-based complex neural network (Liang et al., 2020b) for MR image reconstruction.

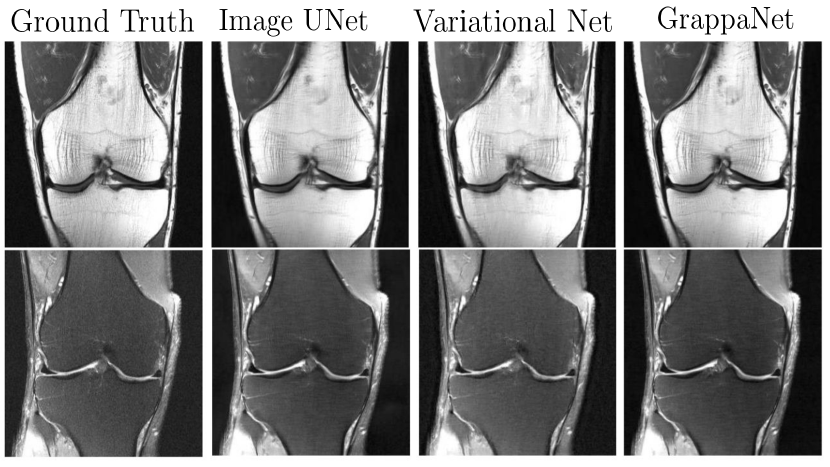

Inverse Mapping for Image Rectification: In CNN based sequential spatial models such as DeepADMM net models (Sun et al., 2016; Schlemper et al., 2017) and Deep Cascade CNN (DCCNN) (Schlemper et al., 2017), the regularization is done in image space using the following set of equations:

| (43) |

Here, and are Lagrange multipliers. The ISTA net (Zhang and Ghanem, 2018) modifies the above image update rule as follows, , using a CNN network . Note that the DeepADMM network demonstrated good performance when the network was trained on brain data but tested on chest data. Later, MODL (Aggarwal et al., 2019) proposed a model based MRI reconstruction where they used a convolution neural network (CNN) based regularization prior. Later, a dynamic MRI using MODL based deep learning was proposed by (Biswas et al., 2019). The optimization, i.e. , denoises the alias artifacts and noise using a CNN network as a regularization prior, and is a trainable parameter. To address this concern, a full end-to-end CNN model called GrappaNet (Sriram et al., 2020b) was developed, which is a nonlinear version of GRAPPA set within a CNN network. The CNN network has two sub networks; the first sub network, , fills the missing k-space lines using a non-linear CNN based interpolation function similar to GRAPPA. Subsequently, a second network, , maps the filled k-space measurement to the image space. The GrappaNet model has shown excellent performance ( PSNR, SSIM) on the FastMRI dataset and is one of the best performing methods. A qualitative comparison is shown in Fig. 10.

Along similar lines, a deep variational network (Hammernik et al., 2018) is used to MRI reconstruction. Other works, such as (Wang et al., 2016; Cheng et al., 2018; Aggarwal et al., 2018) train the parameters of a deep network by minimizing the reconstruction error between the image from zero-filled k-space and the image from fully sampled k-space. The cascaded CNN network learns spatio-temporal correlations efficiently by combining convolution and data sharing approaches in (Schlemper et al., 2017). The (Seegoolam et al., 2019) method proposed to use a CNN network to estimate motions from undersampled MRI sequences that is used to fuse data along the entire temporal axis.

5.4 Recurrent Neural Networks

Inverse mapping from k-space: We note that a majority of the iterative temporal networks, a.k.a the recurrent neural network models, are k-space to image space reconstruction methods and typically follow the optimization described in Section 5.3. The temporal methods, by design, are classified into two categories, namely (i) regularization methods, and (ii) variable splitting methods.

Several state-of-the-art methods have considered temporal methods as a way to regularize using the iterative hard threshold (IHT) method from (Blumensath and Davies, 2009) that approximates the norm. Mathematically, the IHT update rule is as follows:

| (44) |

where is the step-size parameter, is the operator that sets all but -largest values to zero (proxy for operation), and the dictionary satisfies the restricted isometry property (RIP)555Restricted Isometry Property (RIP): The projection from the measurement matrix in Eqn. 17 should preserve the distance between two MR images and bounded by factors of and , i.e. , where is a small constant.. The work in (Xin et al., 2016) shows that this hard threshold operator resembles the memory state of the LSTM network. Similar to the clustering based sparsity pattern of IHT, the gates of LSTM inherently promotes sparsity. Along similar lines, the Neural Proximal Gradient Descent work (Mardani et al., 2018b) envisioned a one-to-one correspondence between the proximal gradient descent operation and the update of a RNN network. Mathematically, an iteration of a proximal operator given by: , resembles the LSTM update rule:

| (45) |

where is the update step, is the hidden state and is a dictionary. Different from these, a local-global recurrent neural network is proposed in (Guo et al., 2021) that uses two recurrent networks, one network to capture high frequency components, and another network to capture the low frequency components. The method in (Oh et al., 2021) uses a bidirectional RNN and replaces the dense network structure of (Zhu et al., 2018) while removing aliasing artifacts in the reconstructed image.

The Convolutional Recurrent Neural Networks or CRNN (Qin et al., 2018) method proposed a variable splitting and alternate minimisation method using a RNN based model. Recovering finer details was the main challenge of the PyramidRNN (Wang et al., 2019) that proposed to reconstruct images at multiple scales. Three CRNNs are deployed to reconstruct images at different scales, i.e. , and the final data consistency is performed after are combined using another CNN. The CRNN is used as a recurrent neural network in the variational approach of VariationNET (Sriram et al., 2020a), i.e.

| (46) |

where is a CRNN network that provides MR reconstruction. In this unrolled optimization method, the CRNN is used as a proximal operator to reconstruct the MR image. The VariationNET is a followup work of Deep Variational Network of (Hammernik et al., 2018) that we discussed in Sec. 5.3. The VariationalNET unrolls an iterative algorithm involving a CRNN based recurrent neural network based regularizer, while the Deep Variational Network of (Hammernik et al., 2018) unrolls an iterative algorithm involving a receptive field based convolutional regularizer.

5.5 Hypernetwork Models

Hypernetworks are meta-networks that regress optimal weights of a task network (often called as data network (Pal and Balasubramanian, 2019) or main network (Ha et al., 2016)). The data network performs the mapping from aliased or the low resolution images to the high resolution MR images . The hypernetwork estimates weights of the network given the random variable sampled from a prior distribution . The end-to-end network is trained by optimizing:

| (47) |

In (Wang et al., 2021), the prior distribution is a uniform distribution (and the process is called Uniform Hyperparameter Sampling) or the sampling can be based on the data density (called data-driven hyperparameter sampling). Along similar lines, the work in (Ramanarayanan et al., 2020) trained a dynamic weight predictor (DWP) network that provides layer wise weights to the data network. The DWP generates the layer wise weights given the context vector that comprises of three factors such as the anatomy under study, undersampling mask pattern and the acceleration factor.

6 Comparison of state-of-the-art methods

| Acceleration | Model | NMSE | PSNR | SSIM | Acceleration | Model | NMSE | PSNR | SSIM |

|---|---|---|---|---|---|---|---|---|---|

| 4-fold | Zero Filled | 0.0198 | 32.51 | 0.811 | 8-fold | Zero Filled | 0.0352 | 29.60 | 0.642 |

| SENSE (Pruessmann et al., 1999) | 0.0154 | 32.79 | 0.816 | SENSE (Pruessmann et al., 1999) | 0.0261 | 31.65 | 0.762 | ||

| GRAPPA (Griswold et al., 2002) | 0.0104 | 27.79 | 0.816 | GRAPPA(Griswold et al., 2002) | 0.0202 | 25.31 | 0.782 | ||

| RfineGAN(Quan et al., 2018) | 0.0138 | 34.00 | 0.901 | RefineGAN(Quan et al., 2018) | 0.0221 | 32.09 | 0.792 | ||

| DeepADMM (Sun et al., 2016) | 0.0055 | 34.52 | 0.895 | DeepADMM(Sun et al., 2016) | 0.0201 | 36.37 | 0.810 | ||

| LORAKI (Kim et al., 2019) | 0.0091 | 35.41 | 0.871 | LORAKI (Kim et al., 2019) | 0.0181 | 36.45 | 0.882 | ||

| VariationNET (Sriram et al., 2020a) | 0.0049 | 38.82 | 0.919 | VariationNET (Sriram et al., 2020a) | 0.0211 | 36.63 | 0.788 | ||

| GrappaNet (Sriram et al., 2020b) | 0.0026 | 40.74 | 0.957 | GrappaNet (Sriram et al., 2020b) | 0.0071 | 36.76 | 0.922 | ||

| J-MoDL (Aggarwal and Jacob, 2020) | 0.0021 | 41.53 | 0.961 | J-MoDL (Aggarwal and Jacob, 2020) | 0.0065 | 35.08 | 0.928 | ||

| Deep Decoder (Heckel and Hand, 2018) | 0.0132 | 31.67 | 0.938 | Deep Decoder (Heckel and Hand, 2018) | 0.0079 | 29.654 | 0.929 | ||

| DIP (Ulyanov et al., 2018) | 0.0113 | 30.46 | 0.923 | DIP (Ulyanov et al., 2018) | 0.0076 | 29.18 | 0.912 |

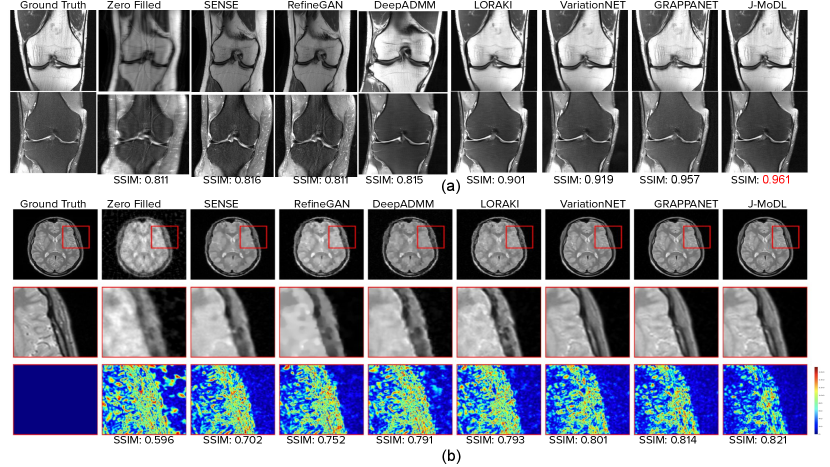

Given the large number of DL methods being proposed, it is imperative to compare these methods on a standard publicly available dataset. Many of these methods have shown their effectiveness on various real world datasets using different quantitative metrics such as SSIM, PSNR, RMSE, etc. There is, however, a scarcity of qualitative and quantitative comparison of these methods on a single dataset. While the fastMRI challenge allowed comparison of several methods, yet, several recent methods from the categories discussed above were not part of the challenge. Consequently, we compare a few representative MR reconstruction methods both qualitatively and quantitatively on the fastMRI knee dataset (Zbontar et al., 2018). We note that, doing a comprehensive comparison of all the methods mentioned in this review is not feasible due to non-availability of the code as well as the sheer magnitude of the number of methods (running into hundreds). We compared the following representative models:

- •

Zero filled image reconstruction method

- •

Classical image space based SENSE method (Pruessmann et al., 1999)

- •

Classical k-space based GRAPPA method (Griswold et al., 2002)

- •

Unrolled optimization based method called DeepADMM (Sun et al., 2016)

- •

Low rank based LORAKI (Kim et al., 2019)

- •

Generative adversarial network based RefineGAN (Quan et al., 2018) network

- •

Variational network called VariationNET (Sriram et al., 2020a)

- •

The deep k-space method GrappaNet (Sriram et al., 2020b)

- •

Active acquisition based method J-MoDL (Aggarwal and Jacob, 2020)

- •

Untrained network model Deep Decoder (Heckel and Hand, 2018) and

- •

Deep Image Prior DIP (Ulyanov et al., 2018) method.

The fastMRI knee dataset consists of raw k-space data from 1594 scans acquired on four different MRI machines. We used the official training, validation and test data split in our experiments. We did not use images with a width greater than 372 and we note that such data is only of the training data split. Both the 4x and 8x acceleration factors were evaluated.

We used the original implementation 666 Below are the official implementations of various methods we discussed:

VariationaNET: https://github.com/VLOGroup/mri-variationalnetwork/

GrappaNET: https://github.com/facebookresearch/fastMRI.git

RefineGAN: https://github.com/tmquan/RefineGAN.git

DeepADMM: https://github.com/yangyan92/Deep-ADMM-Net.git

SENSE, GRAPPA: https://mrirecon.github.io/bart/

Deep Decoder: https://github.com/MLI-lab/ConvDecoder.git of GrappaNet, VariationaNET, SENSE, GRAPPA, DeepADMM, Deep Decoder, and DIP method. Similar to GrappaNET, we always use the central 30 k-space lines to compute the training target. Treating the real and imaginary as two distinct channels, we dealt with the complex valued input, i.e. we have 30 channel input k-space measurements for the 15-coil complex-valued k-space. Where applicable, the models were trained with a linear combination of and SSIM loss, i.e.

| (48) |

where is a hyperparameter, is the model prediction, and x is the ground truth.

Quantitative results are shown in Table 1 for several metrics such as NMSE, PSNR, and SSIM scores. We observe that GrappaNET, J-MoDL and VariationNET outperformed the baseline methods by a large margin. We note that the zero-filled and SENSE reconstructions in Fig 11 (a), (b) show a large amount of over-smoothing. The reconstruction of SENSE and zero-filled model also lack a majority of the high frequency detail that is clinically relevant, but fine details are visible in case of GrappaNET, VariationNET, J-MoDL, and RefineGAN methods. The comparable performance of Deep Decoder and DIP advocates the importance of letting untrained neural network figure out how to perform k-space to MR image reconstruction. The J-MoDL method makes heavy use of training data and the joint optimization of k-space lines and the reconstruction of MR images to get good results both for and as shown in Table 1. On the other hand, the Deep Decoder and DIP methods achieve good performance using untrained networks as discussed in Sec. 5.2, which is advantageous as it generalizes to any MR reconstruction scenario.

7 Discussion

In this paper, we discussed and reviewed several classical reconstruction methods, as well as deep generative and non-generative methods to learn the inverse mapping from k-space to image space. Naturally, one might ask the following questions given the review of several papers above: “are DL methods free from errors?”, “do they always generalize well?”, and “are they robust?”. To better understand the above mentioned rhetorical questions, we need to discuss several aspects of the performance of these methods such as (i) correct reconstruction of minute details of pathology and anatomical structures; (ii) risk quantification; (iii) robustness; (iv) running time complexity; and (v) generalization.

Due to the blackbox-like nature of DL methods, the reliability and risk quantification associated with them are often questioned. In a recent paper on “risk quantification in Deep MRI reconstruction” (Edupuganti et al., 2020), the authors strongly suggest for quantifying the risk and reliability of DL methods and note that it is very important for accurate patient diagnoses and real world deployment. The paper also shows how Stein’s Unbiased Risk Estimator (SURE) (Metzler et al., 2018) can be used as a way to assess uncertainty of the DL model:

| (49) |

where the second term represents the end-to-end network sensitivity to small input perturbations. This formulation works even when there is no access to the ground truth data x. In this way, we can successfully measure the risk associated with a DL model. In addition to the SURE based method, there are a few other ways to quantify the risk and reliability associated with a DL model. The work “On instabilities of deep learning in image reconstruction” (Antun et al., 2019) uses a set of pretrained models such as AUTOMAP (Zhu et al., 2018), DAGAN (Yang et al., 2017), or Variational Network (Sriram et al., 2020a) with noisy measurements to quantify their stability. This paper as well as several others (Narnhofer et al., 2021; Antun et al., 2019) have discussed how the stability of the reconstruction process is related to the network architecture, training set and also the subsampling pattern.

Whether a DL method can capture high frequency components or not is also another area of active research in MR reconstruction. The robustness of a DL based MR reconstruction method is also studied in various papers such as (Raj et al., 2020; Cheng et al., 2020a; Calivá et al., 2020; Zhang et al., 2021). For example, the (Cheng et al., 2020a; Calivá et al., 2020; Cheng et al., 2020b) works perceived adversarial attack as a way to capture minute details during sMR reconstruction thus showing a significant improvement in robustness compared to other methods. The work proposed to train a deep learning network with a loss that pays special attention to small anatomical details. The methodology progressively adds minuscule perturbation to the input not perceivable to human eye but may shift the decision of a DL system. The method in (Raj et al., 2020) uses a generative adversarial framework that entails a perturbation generator network to add minuscule distortion on k-space measurement . The work in (Zhang et al., 2021) proposed to incorporate fast gradient based attack on a zero filled input image and train the deep learning network not to deviate much under such attack. The FINE (Zhang et al., 2020) methodology, on the other hand, has used pre-trained recon network that was fine-tuned using data consistency to reduce generalization error inside unseen pathologies. Please refer to the paper (Darestani et al., 2021) which provides a summary of robustness of different approaches for image reconstruction.